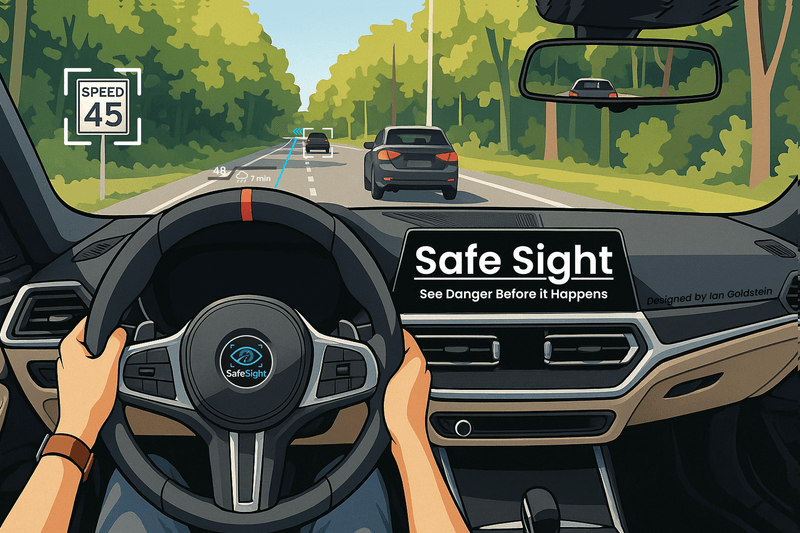

Safe Sight

AR heads-up display for navigating hazardous conditions in vehicles

Duration

16 weeks

Client

Senior Thesis in SMC IxD Program

Role

Researcher, Prototyping Designer, Coder, Presenter

Skills Used

Desktop Research, Concept Posters, Competitive Analysis, Prototyping, C# Coding, Unity Engine Development, Public Exhibition

What is Safe Sight?

Safe Sight is an augmented reality heads-up display that assists drivers in poor weather conditions. The Safe Sight demonstration lets anyone drive through a simulated city in less-than-optimal conditions. The built-in heads-up display projects real-time driving data directly onto the windshield view. I built the prototype completely from scratch in the Unity game engine. The simulator pairs an interactive steering wheel, which lets drivers adjust the information they see, with pedals to create a fully immersive experience. The stormy city environment was chosen to simulate a very common hazard and to show how thoughtful interface design can support visibility and reaction time. The goal of Safe Sight is to enhance the driver's attention, especially in the moments when stress or uncertainty peaks. This project shows how design and technology can work together to create safer roads, not just through automation, but by empowering people with smarter, more human-centered tools. Whether you're an experienced driver or just getting behind the wheel, Safe Sight helps you see the danger before it happens.

PROBLEM

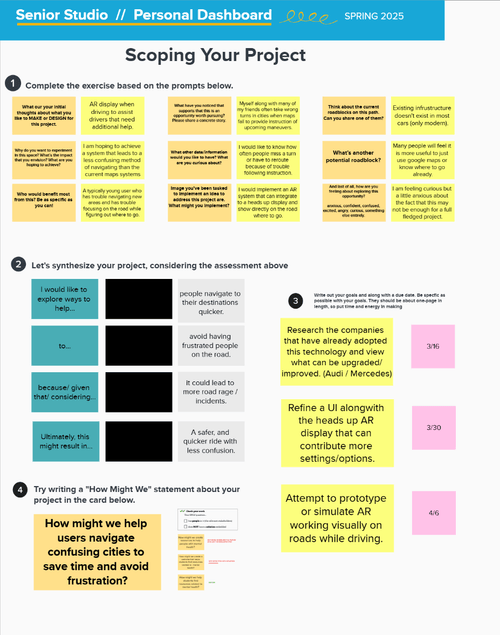

Driving in unfamiliar cities, especially in dangerous weather, carries a risk of accidents due to poor visibility, unclear navigation, and cognitive overload. Road accidents cause 1.3 million deaths every year globally, many of which are preventable. Through research, I discovered that weather alone increases the risk of a fatal crash by 34% on average. Despite how serious this issue is, many vehicle systems don't provide real-time visual tools to guide drivers safely. Initially, my idea was to develop an AR assistant to help frustrated drivers navigate traffic, but it lacked a clear problem focus. After scoping my project, I realized the issue wasn't just stress; it was disorientation in unfamiliar or hazardous environments. One major challenge was finding a way to communicate safety information without overwhelming the driver. I ultimately created an immersive simulation environment where users could safely experience and test these high-stakes situations firsthand.

INSIGHT

Through research, testing, and development, I uncovered several key insights. First, AR elements need a strong visual hierarchy to prevent distraction and avoid overloading drivers cognitively. Next, weather is a critical safety factor that vehicle interfaces often ignore: beyond reporting the weather, they do little to help drivers through it. Finally, an interactive simulation is much more effective than static mockups for user testing. My process included desktop research into AR in today's automotive industry, along with accidents that could have been prevented. I found that companies such as BMW and Audi are working on heads-up displays, but they focus on aspects other than bad weather. I then ran user tests with a mock video simulation and a steering wheel, and the feedback led me to create my design system, which defined structured colors, typography, and set layouts. Each phase revealed more about what real drivers needed, ultimately resulting in the simulator.

SOLUTION

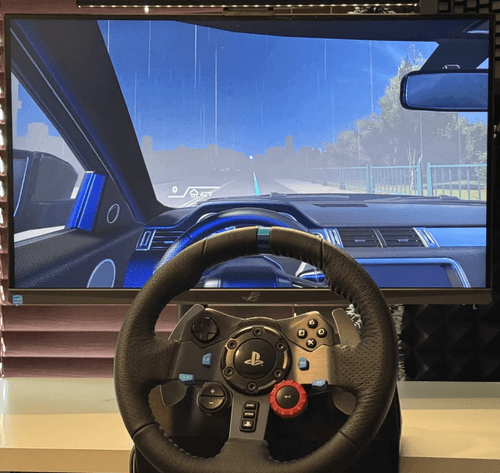

The final product is Safe Sight, an augmented reality heads-up display demonstrated in a custom-built Unity driving simulator. It helps drivers "see the danger before it happens" by providing projected route lines, speed tracking, turn-by-turn AR navigation, and real-time hazard alerts, all overlaid directly onto the road in front of the driver. The simulator is controlled with a Logitech G29 steering wheel and pedals, allowing users to drive through a realistic city environment during heavy rain or storms. Buttons on the wheel allow for customizable HUD interactions, such as toggling between views or scrolling through data. The experience shows how a clear, modular AR system can reduce driver confusion, especially in poor weather. The final interface applies the full design system. Future possibilities for Safe Sight include a modular design that can be fitted to any car and a configurable version of the simulator that companies can adopt to test their own products.

Deciding My Project

Since childhood, I've had a deep love for cars, and I knew I wanted to do something that aligned with that passion. Initially, I explored various pain points related to driving, particularly the frustration and inefficiency of navigating dense urban environments. My early idea was to create an AR assistant to help stressed drivers, but this felt too vague and didn't address a concrete safety issue.

Through research, I identified that a major challenge drivers face is navigating confusing or dangerous cities safely, especially in adverse conditions. This pivot led me to explore augmented reality as a way to bring visual clarity and intuitive feedback to the driving experience. What started as a mock-up video evolved into a full-fledged interactive simulator.

Conducting My First Experiment

I created prototype videos that overlaid simulated AR HUD elements on real driving footage, and I placed participants in front of a mock steering wheel. They were instructed to "drive" and respond as if the simulation were real. This simple test revealed two key insights:

- A strong visual design system was necessary to keep interface elements readable and consistent.

- The interface needed clear hierarchy to prevent cognitive overload and ensure quick reaction times.

These findings shaped the foundation for all design decisions going forward.

Researching Competitor Companies

I examined existing AR HUD solutions from brands like BMW and Audi:

- BMW integrates route setting with voice assistants, displaying navigation directly on the windshield to reduce cognitive load.

- Audi emphasizes driver-centric safety alerts in the HUD, ensuring minimal eye movement away from the road.

However, both remain limited to high-end vehicle ecosystems and lack modular or testable platforms. This gap confirmed the need for an accessible, interactive simulator that could prototype AR systems independently of luxury car infrastructure.

Creating The Simulator

Building the simulator required a deep dive into Unity3D and C# programming. I built a realistic virtual vehicle controlled with a Logitech G29 steering wheel and pedals. Key features include:

- Dynamic weather simulation (e.g., thunderstorms)

- AR HUD overlays including turn-by-turn arrows, projected road paths, speed indicators, and hazard warnings

- Button-controlled HUD customization on the steering wheel

- Modular design system for UI elements using best practices in contrast, visibility, and information hierarchy

This phase pushed me to rapidly gain new technical skills and create a repeatable user experience without requiring in-person facilitation.

Safe Sight Unity Driving Experience

Grad Show Exhibition

Watching people experience my simulator was one of the best feelings I've had as a designer. Seeing people use and enjoy something I worked so hard on was deeply rewarding, and having dozens of people gather around at once to watch others try the simulator was incredible. I also received valuable feedback on how each person viewed the experience. It was eye-opening to see that people with different backgrounds bring different opinions, which means no design can ever be a one-size-fits-all solution. Many people felt that the HUD would be more of a distraction than a solution, which is a completely valid concern. Most of these people had never used a HUD before, whereas those who had used one said they enjoyed the additions to the car and would use them in real life. There may be a correlation between prior HUD experience and acceptance, but either way, I was glad people had critical feedback to give; it is a crucial part of the design process.

My Reflection

This entire project has been the highlight of my senior year in the Interaction Design program. When we were first told about the thesis, I immediately knew I wanted to work with cars. Safe Sight ended up being one of the most challenging and rewarding projects I've ever taken on, especially since it was my first solo academic project. This project pushed me to learn entirely new tools, from building a simulator in Unity to coding in C#. It also deepened my understanding of UX principles, AR design, and user safety. Through user testing, research, and hands-on experimentation, I saw how powerful design systems can be in balancing technically complex systems with human-centered design. Ultimately, Safe Sight helped me grow not just as a designer but as a problem-solver overall.